How to Scrape Job Postings: 2024 Guide, Methods & Tools

Job scraping is the automated collection of job postings from websites such as LinkedIn, Indeed, or company career pages. Also known as automated job board scraping, it helps businesses collect data on job titles and descriptions for recruitment, lead generation, or market research. It benefits many firms beyond recruitment agencies, including IT services, software development, and HR technology companies.

Okay, where does one even begin with job scraping? There are many ways to collect job posting data, and you will need the right tools and methods to get the data you need for your purposes.

Let's look at the best solutions to turn job data into useful information. Trust me, once you see how this works, you'll be wondering how you ever lived without it!

What is Job Scraping?

Definition and Purpose of Job Scraping

Job scraping means collecting data from job postings on the internet, whether on job boards, company career pages, or other online job portals. Its advantage is that it retrieves data quickly and, at the same time, organizes job information into an accessible format that can be used in many ways.

Scraping job postings involves using a web scraper or another dedicated program to browse websites, find job postings, and extract specific details such as job titles, descriptions, locations, and salaries. This information can then be saved in a structured format so that it is easy to access and analyze.

The objective of job scraping is to provide a comprehensive, up-to-date list of job vacancies in order to:

- Generate leads.

- Source better talent and speed up your hiring process.

- Analyze job market trends and salary benchmarks.

- Make an informed career decision based on market trends and salary data.

- Engage in market research and competitive analysis.

Job scraping also allows companies and job seekers to obtain vital information on the changing job market and remain competitive.

What is Job Postings Data (with Job Openings) and Why is it Important?

What is Job Postings Data?

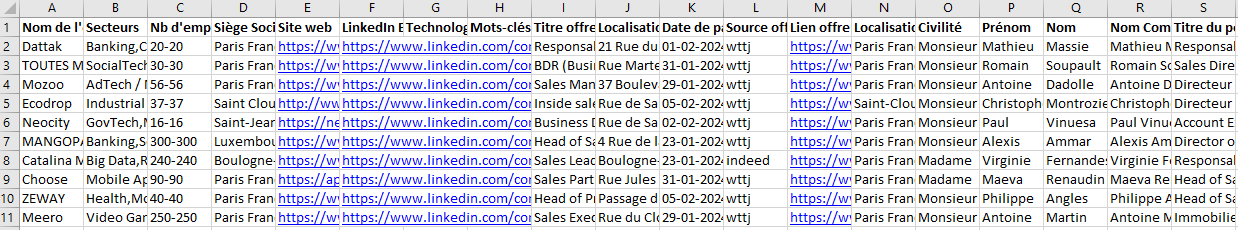

Job postings data covers the key details found in a job posting, such as the job title, description, location, and salary. This information is publicly available on job boards, company career pages, and specialized job aggregator websites, which together hold a large volume of public data waiting to be extracted and put to good use.

By analyzing this data, businesses and individuals gain a clearer understanding of the job market. Employers can make better hiring decisions, and job seekers can navigate their careers with more awareness. In both cases, access to this information supports better-informed, strategic decisions.

Job Postings Structure and Fields

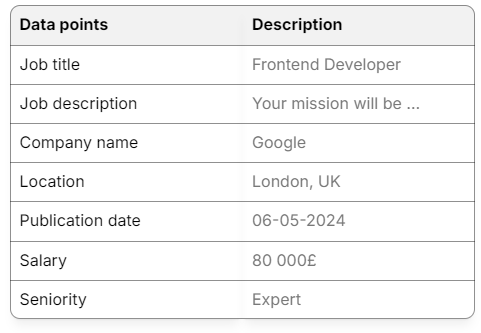

A typical job posting has the following fields:

- Job title: The name of the role

- Job description: Job responsibilities and requirements

- Job posting date: Date on which the job offer was published.

- Location: Job location

- Salary: Salary or hourly rate

- Job type: Full-time, part-time or contract

- Industry: Industry or sector

- Company: The company offering the job

These fields give a complete overview of the job opportunity so job seekers can find jobs that match their skills and preferences during their job search.
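To make the fields above concrete, here is a minimal sketch of how one scraped posting might be represented in Python. The `JobPosting` class, its field names, and the example values are illustrative assumptions that mirror the list above, not a schema used by any particular job board or tool.

```python
from dataclasses import dataclass, asdict
from datetime import date
from typing import Optional


@dataclass
class JobPosting:
    """One scraped job posting; field names mirror the list above (hypothetical schema)."""
    title: str
    description: str
    posted_on: Optional[date]   # job posting date, if the board exposes it
    location: str
    salary: Optional[str]       # kept as raw text, e.g. "$50/hour" or "€45k-55k"
    job_type: Optional[str]     # "Full-time", "Part-time", "Contract", ...
    industry: Optional[str]
    company: str


# Made-up example record
posting = JobPosting(
    title="Python Developer",
    description="Build and maintain data pipelines.",
    posted_on=date(2024, 5, 2),
    location="New York, NY",
    salary="$120,000 - $140,000 a year",
    job_type="Full-time",
    industry="Software",
    company="Example Corp",
)

# asdict() turns the record into a plain dict, ready for CSV or JSON export
print(asdict(posting)["title"])  # Python Developer
```

Keeping salary as raw text is a deliberate choice in this sketch: boards mix hourly, yearly, and range formats, so parsing is better left to a later cleaning step.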

Why is Scraping Job Data Important?

For companies, job scraping is often used for lead generation based on the data in job postings. This requires identifying the decision-maker and finding their contact information, yet job postings rarely name a direct contact. Even though this information cannot be sourced directly from the posting, analyzing job trends can yield valuable insights into the skills currently in demand and help shape recruitment strategies.

The advantages of job scraping extend beyond lead generation:

- Job listing aggregation: Scrape data from various job portals and bring it together in one hub.

- Time savings: Automating the scraping process reduces manual work.

- Better job matching: Match candidates with better job opportunities.

- Quick updates: Use real-time job data to make quicker, better-informed decisions.

- Competitive analysis: Monitor competitors' hiring trends and strategies.

- Better recruitment: Leverage deep data insights to enhance overall recruitment efforts.

On the other hand, scraping job data is not all smooth sailing. Let's take a closer look at some common obstacles:

Challenges in Web Scraping: Data Quality, Duplicates, and Dynamic Boards

Scraping job boards is not as easy as it may first appear. Several problems can complicate the process:

Data quality: Job boards can get a little messy. Duplicates, badly formatted data, or missing data are common, which makes scraped data less reliable. You will probably have to clean and pre-process the data to ensure its accuracy before use.

Anti-scraping techniques: Many job boards use CAPTCHAs or IP blocking to prevent automated scrapers from accessing their data. For this reason, many scrapers rely on proxies so they can keep gathering data without being blocked.

Dynamic content: Many job portals use technologies like JavaScript or AJAX to display postings. This complicates things for regular scrapers, which must first render the dynamic content before they can capture the data.

Fortunately, there are methods to handle these challenges and ensure that scraped data remains reliable and valuable:

- Data cleaning: After scraping, the data needs to be cleaned up: delete duplicate entries, correct format inconsistencies, and add any missing information needed to make the data valid for analysis.

- Handling anti-scraping measures: Job boards use CAPTCHAs and IP blocking, but scrapers can often get around these obstacles by rotating user agents, IP addresses, and cookies.

- Advanced scraping techniques: Many job boards now load content with JavaScript and AJAX rather than serving basic static HTML, so extracting it demands more advanced scraping techniques. Once such content is rendered, a scraper can capture the data easily.

By addressing these challenges, businesses can ensure the accuracy and reliability of their scraped data, make informed decisions, and gain valuable insights.
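The data-cleaning step can be sketched in plain Python. This minimal version assumes each scraped posting is a dict with `title`, `company`, `location` and `salary` keys (a hypothetical layout); it normalizes whitespace, fills a missing location with a placeholder, and treats postings with the same title, company and location as duplicates.

```python
import re


def normalize(text):
    """Collapse runs of whitespace and strip the ends; treat None as empty."""
    return re.sub(r"\s+", " ", text or "").strip()


def clean_postings(raw_postings):
    """Normalize fields, drop duplicates, and fill missing values.

    Two postings count as duplicates when title, company and location
    match case-insensitively (an assumption; adjust the key to your data).
    """
    seen = set()
    cleaned = []
    for post in raw_postings:
        record = {
            "title": normalize(post.get("title")),
            "company": normalize(post.get("company")),
            "location": normalize(post.get("location")) or "Unknown",
            "salary": normalize(post.get("salary")) or None,
        }
        key = (record["title"].lower(), record["company"].lower(),
               record["location"].lower())
        if not record["title"] or key in seen:
            continue  # skip postings without a title and repeats of earlier ones
        seen.add(key)
        cleaned.append(record)
    return cleaned


# Made-up sample of messy scraped rows
raw = [
    {"title": "  Data Analyst ", "company": "Acme", "location": "Paris"},
    {"title": "Data  Analyst", "company": "ACME", "location": "paris", "salary": "€45k"},
    {"title": "Backend Engineer", "company": "Acme", "location": None},
]
print(clean_postings(raw))
```

Keeping the first occurrence is the simplest policy; a fuller version might merge fields such as salary from later duplicates instead of discarding them.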

8 Most Used Job Scraping Methods in 2024: Pros and Cons

There is a wide variety of methods for scraping job postings, and the right one depends on your specific needs and the type of scraper you decide to use. The strategy can also differ considerably depending on whether you target job boards or application pages. Here are some of the most popular ways to scrape jobs:

1. Manual Job Extraction

Manual job extraction is what it sounds like: laboriously rekeying jobs from job boards, one posting at a time. It is inherently a very slow and labor-intensive process, useful only for very limited-scale data extraction or when websites ban automated scraping. Clearly, this isn't something that you would want to rely on with heavy volumes of data.

But let’s be real: if you’re reading this, you’re probably looking for a way to automate this process because it’s time-consuming, tedious, and can require too many resources. We’ve all been there! Automating job scraping is a much more efficient and scalable solution for long-term success.

While manual extraction can be useful in certain circumstances, automation is the better long-term choice for most businesses.

2. Scraping Job Postings Using Python: Programmatic, From Scratch

If you are ready to dive into automation, Python is an excellent choice for scraping job postings. This versatile programming language lets you scrape job details from LinkedIn, Indeed, and many other job portals. In simple terms, a script does much of what a human would do: it browses the web page for job postings and extracts the necessary information, such as job titles, job descriptions, company names, and even URLs.

You can extract and structure job data in a highly customizable way using the Python packages Requests, BeautifulSoup, and Pandas.

How it works: Here is an overview of how job scraping in Python works:

- Prepare your Python environment by installing the main libraries for HTTP requests and HTML parsing, such as requests and BeautifulSoup, respectively.

- Send an HTTP request to the job board’s URL to retrieve the page content.

- Parse the HTML and extract the information you want, such as job titles, descriptions, company names, and links.

- Finally, store the extracted data in a structured format, such as a CSV file, for further analysis.

Example code for scraping job postings:

```python
import requests
from bs4 import BeautifulSoup

# Send a request to the job board. A browser-like User-Agent header and a
# timeout are added because many sites reject or stall bare scripted requests.
url = 'https://www.indeed.com/jobs?q=developer&l=New+York'
headers = {'User-Agent': 'Mozilla/5.0'}
response = requests.get(url, headers=headers, timeout=10)
response.raise_for_status()  # stop on 403/429 and other HTTP errors

# Parse the HTML content
soup = BeautifulSoup(response.text, 'html.parser')

# Extract titles (the 'jobTitle' class depends on the site's current markup
# and may change at any time)
for job in soup.find_all('h2', class_='jobTitle'):
    print(job.get_text(strip=True))
```

This only touches on the basics of job scraping in Python. There is much more to handle, such as organizing and displaying the information in Google Sheets or Notion, or feeding it into your own systems, which can make the whole process quite complicated.
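The final step from the list above, storing results in a structured format, can be sketched with Python's built-in `csv` module. The `save_jobs_to_csv` helper, its column names, and the sample rows are illustrative assumptions; in practice the rows would be built inside the extraction loop.

```python
import csv


def save_jobs_to_csv(jobs, path):
    """Write a list of job dicts to a CSV file with a header row."""
    fieldnames = ["title", "company", "location", "url"]
    with open(path, "w", newline="", encoding="utf-8") as f:
        # extrasaction="ignore" silently drops keys not listed in fieldnames
        writer = csv.DictWriter(f, fieldnames=fieldnames, extrasaction="ignore")
        writer.writeheader()
        writer.writerows(jobs)


# Made-up example rows
jobs = [
    {"title": "Python Developer", "company": "Example Corp",
     "location": "New York, NY", "url": "https://example.com/jobs/1"},
    {"title": "Data Engineer", "company": "Sample Inc",
     "location": "Remote", "url": "https://example.com/jobs/2"},
]
save_jobs_to_csv(jobs, "jobs.csv")
```

If you already use Pandas, `pandas.DataFrame(jobs).to_csv("jobs.csv", index=False)` produces an equivalent file.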

Tools to use: Python, requests, BeautifulSoup...

Check out our comprehensive Step-by-Step Tutorial on how to scrape job postings using Python, complete with code examples and practical tips! Get started here!

3. Using Web Scraping APIs to Scrape Job Boards

Web scraping APIs make it a heck of a lot easier to extract job posting data because you don't need to build your own scraper. These APIs (Application Programming Interfaces) let you request specific details, such as job descriptions or company information from job boards. The API returns the information in a structured format such as JSON or XML, which greatly speeds up the process and makes the data much easier to manage.

How it works: you send a request to the API, and it responds with the job postings you need. You don't need to build your own scraping infrastructure or deal with raw HTML, so the whole process becomes easier.

This is a great choice for companies that want to source job data quickly and effectively without the headache of developing custom scrapers.

Tools to use: Mantiks API, Zyte API, ScraperAPI (Oxylabs), Octoparse API, DataMiner API, Apify, Zenscrape,...
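To illustrate the request-and-response flow described above, here is a minimal sketch using only the standard library. The endpoint `https://api.example.com/v1/jobs`, its `q`/`location`/`api_key` parameters, and the `results` field in the JSON response are hypothetical; each provider listed above documents its own URL scheme, authentication, and response format.

```python
import json
from urllib.parse import urlencode

# Hypothetical endpoint: real providers each use their own URL scheme and auth
API_BASE = "https://api.example.com/v1/jobs"


def build_request_url(query, location, api_key):
    """Build the GET URL for a job search on the hypothetical API."""
    params = {"q": query, "location": location, "api_key": api_key}
    return f"{API_BASE}?{urlencode(params)}"


def parse_jobs(payload):
    """Turn the API's JSON response into a list of simple job dicts.

    Assumes a response shaped like {"results": [{"title": ..., "company": ...}]}.
    """
    data = json.loads(payload)
    return [
        {"title": job.get("title"), "company": job.get("company")}
        for job in data.get("results", [])
    ]


# In real use the payload would come from an HTTP call, for example
# requests.get(build_request_url(...), timeout=10).text
sample = '{"results": [{"title": "QA Engineer", "company": "Example Corp", "id": 7}]}'
print(build_request_url("developer", "New York", "YOUR_KEY"))
print(parse_jobs(sample))
```

Because the API already returns structured data, the only parsing left is picking the fields you need, which is the main time saving over HTML scraping.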

4. Using Headless Browsers and Headful Browser Extensions

Headless browsers automate scraping by emulating a real user on job boards without displaying anything on screen. They are especially helpful for dynamic content, such as job descriptions loaded with JavaScript.

Headful browser extensions, by contrast, offer a friendlier option for non-developers. Users need no coding skills; they can collect job data directly from web pages through an easy-to-use visual interface.

How it works: A headless browser emulates the actions of a real user on job boards: navigating through pages, clicking buttons, scrolling through listings, and loading dynamic content. Unlike a regular browser, it displays nothing on screen; it runs in the background and extracts job posting data from the loaded content.

Tools to use: Headless: Puppeteer, Selenium... Headful: DataMiner, Web Scraper,...
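As a sketch of the headless approach described above, the function below uses Selenium 4 (one of the tools listed) to load a page in headless Chrome, wait for JavaScript-rendered listings, and read their titles. It assumes Selenium and Chrome are installed; the function name `scrape_rendered_titles` and the default `h2.jobTitle` selector are illustrative choices, not tied to any particular job board's current markup.

```python
def scrape_rendered_titles(url, css_selector="h2.jobTitle", timeout=15):
    """Load a JavaScript-heavy job page in headless Chrome and return job titles.

    Assumes Selenium 4 and Chrome are installed; the CSS selector is an
    example and must be adapted to the target site's markup.
    """
    # Imported inside the function so the sketch can be loaded without Selenium
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    options = Options()
    options.add_argument("--headless=new")  # run Chrome without a visible window
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        # Wait until the JavaScript-rendered listings are present in the DOM
        WebDriverWait(driver, timeout).until(
            EC.presence_of_all_elements_located((By.CSS_SELECTOR, css_selector))
        )
        elements = driver.find_elements(By.CSS_SELECTOR, css_selector)
        return [el.text.strip() for el in elements]
    finally:
        driver.quit()  # always close the browser, even if waiting fails
```

For example, `scrape_rendered_titles("https://www.example.com/jobs")` would return a list of title strings. Pages with infinite scrolling would additionally need repeated `driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")` calls, each followed by a wait, before the results are collected.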

5. ChatGPT for Job Scraping

While ChatGPT can't scrape active job postings directly, it is a very useful tool for scraping-related tasks, although its built-in knowledge only extends to its training cutoff. As a large language model, ChatGPT can help you write web scraping scripts, generate Python code, and make sense of job posting data once it has been gathered.

How it works:

- Writing scripts: You can ask ChatGPT to write Python scripts using libraries like BeautifulSoup or Selenium to scrape job boards and automatically extract posting details.

- Organizing data: Once the data is collected, ChatGPT can help clean it up and sort it into a form that is quick and simple to read or use, from a short summary to a comprehensive report.

- Assisting with analysis: ChatGPT can help you extract key insights from the job data you provide, so you can analyze trends in job listings such as in-demand skills or salary benchmarks.

By applying ChatGPT to your job data analysis, you can identify which skills are in demand and how salaries differ between listings, then cross-reference this information with recruiters' contact data.
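Before or alongside asking ChatGPT, a few lines of plain Python can give a first count of in-demand skills and a salary benchmark. Note that this swaps in simple keyword matching for the language model; the `SKILLS` list, the `skill_demand` helper, and the sample descriptions and salaries are made-up assumptions for illustration.

```python
import re
from collections import Counter
from statistics import median

# Hypothetical skill list; extend it with the skills that matter in your market
SKILLS = ["python", "sql", "aws", "docker", "react"]


def skill_demand(descriptions):
    """Count how many job descriptions mention each skill (whole word, case-insensitive)."""
    counts = Counter()
    for text in descriptions:
        lowered = text.lower()
        for skill in SKILLS:
            if re.search(rf"\b{re.escape(skill)}\b", lowered):
                counts[skill] += 1
    return counts


# Made-up sample data standing in for scraped postings
descriptions = [
    "Senior Python developer with SQL and AWS experience.",
    "Frontend role: React, TypeScript, some Python.",
    "Data engineer: Python, SQL, Docker.",
]
salaries = [95000, 120000, 110000]  # annual salaries parsed from the same postings

print(skill_demand(descriptions).most_common(2))  # [('python', 3), ('sql', 2)]
print(median(salaries))  # 110000
```

Whole-word matching avoids false hits such as "sql" inside "PostgreSQL", but skills with symbols (for example "C++") would need a different pattern.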

6. Buying Job Databases (Job Datasets)

Buying job databases from DaaS (Data as a Service) providers is a fast, smooth way to acquire pre-scraped job posting data without building or managing a scraping system yourself. Businesses can purchase datasets containing job titles, descriptions, company information, and much more from a wide range of job boards, which frees them from the technical hassles of scraping.

How it works: Service providers sell job posting data to companies in ready-to-use form. These datasets often cover multiple job platforms and are frequently updated. Companies receive organized data for immediate use in hiring, market research, or other studies instead of doing the scraping themselves.

Tools to use: Mantiks, Bright Data, Datarade

7. Hiring a Web Scraping Expert (Freelancer, etc.)

Hiring a web scraping expert, such as a freelancer, lets a business outsource the entire scraping job and receive a tailored solution. This is especially useful for companies that need a custom setup but have no developers on their team.

8. A Fully Fledged SaaS Scraper

A full-cycle SaaS tool built for scraping large amounts of data simplifies collecting job postings from numerous job boards. These tools automate everything, from data intake and sorting to managing proxies and bypassing CAPTCHAs, so that companies can easily collect data on job titles, descriptions, and companies without requiring technical expertise.

How it works: It automatically scrapes job boards, extracts data from job postings, and structures it in a clean, ready-to-use format.

Tool to use: Mantiks

Mantiks helps you save time, improve your outreach, and gain insights.

Scraping Methods Comparison: Discover the Best Way to Extract Data for You

When you want to scrape job postings, choosing the right method is key to getting useful job data. Your choice will depend on several factors, including cost, scalability, ease of setup, maintenance, and the type of data you need.

Here's a comparison of eight job scraping methods that help you choose what works for you:

If you’re looking for quick and efficient job data extraction, Web Scraping APIs and All-In-One SaaS Tools are the top picks, scoring 4.5/5. They handle large volumes of data with ease and require little setup or maintenance.

For those who prefer more control, Python scraping offers high customizability (4.0/5), but it requires technical skills and can be challenging and time-consuming. On the other hand, buying pre-scraped job databases gives you instant access to vast amounts of data (4.0/5), though it comes with a hefty price tag.

Where to Scrape? Job Board Scraping Data Sources

Job posting data is very important to businesses looking to understand the job market, find potential customers, and improve hiring processes. Scraping a job board lets you get the data directly, which helps you discover trends and opportunities.

Here’s a quick look at the most popular data sources for job scraping.

- Indeed: Here is our detailed guide on scraping Indeed

- Glassdoor

- Welcome to the Jungle: Click here to read a full article on scraping Welcome to the Jungle

- Career Websites

- Google Jobs

- Government Job Boards

FAQ: Scraping Job Postings Data

Which method is the most efficient for scraping job offers across multiple platforms?

Web Scraping APIs and All-In-One SaaS Job Scraping Tools are the most efficient because they handle large-scale data with minimal setup. They are more scalable and reliable than manual scraping or building a custom job scraper.

Can I combine scraping methods?

Yes. Each method has its strengths, so combining methods such as Web Scraping APIs and Python scripting can improve efficiency, scalability, and data accuracy.

Is web scraping job postings legal?

The legality depends on the website’s terms of service and local laws. While scraping public web data is generally legal, using the data without permission or violating site policies can expose you to legal risks.

Read this in-depth article to uncover the answer to whether job scraping is legal and how to stay compliant.

What tools simplify job scraping?

Job board scrapers like Mantiks simplify job scraping by automating the process and providing structured data with contact information.

Discover the best tools for job scraping! Check out the top 10 job scraping software and solutions here: Best Job Scraping Tools & Software.

Where can I find old job postings?

Most sites, including Indeed, allow access to expired listings if you have the job ID. However, for a broader search, tools like Mantiks store historical job data, letting you look up past postings by keyword or location. Simple and effective!

For more details, visit: How to Find Old Job Postings.

About the author

Alexandre Chirié

CEO of Mantiks

Alexandre Chirié is the co-founder and CEO of Mantiks. With a strong engineering background from Centrale, Alexandre has specialized in job postings data, signal identification, and real-time job market insights. His work focuses on reducing time-to-hire and improving recruitment strategies by enabling access to critical contact information and market signals.